Building Your AI Advisory Board

The Critical Roles You’re Missing

The rapid expansion of AI technologies, from generative models like the GPT series to the machine learning algorithms behind predictive analytics, has changed how organizations operate across nearly every business function.

According to PwC, by 2030 AI could contribute as much as $15.7 trillion to the global economy, provided that organizations manage it well.

An AI advisory board is a group of experts from multiple disciplines, typically external to the organization, that provides valuable yet nonbinding advice on AI strategy, ethics and implementation. Unlike internal AI teams, which focus on executing the organization’s strategy, advisory board members provide high-level oversight of how the organization uses AI, including the social obligations that come with deploying AI in the business.

Why is that important? The statistics point to a troubling reality: Bain & Company said that 35% of organizations fail at AI because of poor governance. There are many reasons for that, but most of them stem from a lack of diverse expertise at the board level. In the health industry, for example, Advisory Board’s case studies of organizations that have put an AI governance structure in place found that teams reached solution decisions 20% faster than teams without such governance, while remaining compliant with mandatory regulations like HIPAA.

IBM’s AI Ethics Board was instrumental in promoting ethical AI practices and preventing reputational risks. Without broad-based ethical oversight, organizations risk siloed decision-making, where technical expertise overshadows the organization’s social and ethical obligations.

For most leaders in finance, manufacturing or tech, creating an AI advisory board is not only a competitive necessity but also long overdue. With 84% of S&P 500 companies now reporting some form of AI oversight, significantly more than in prior years, an advisory board is fundamental if AI initiatives are to deliver sustained value while balancing innovation with accountability.

Selecting the Right Mix of Expertise for Alignment

To build an AI advisory board you should begin with a clear assessment of your firm’s AI maturity and goals. Then ask the right questions.

Are you focusing on operational efficiencies, like automating supply chains, or transformative applications, such as personalized customer experiences?

Deloitte’s AI Board Governance Roadmap sensibly recommends starting with a gap analysis: evaluate current board competencies against AI demands, then recruit to fill voids.

The ideal mix includes technical AI specialists, ethical philosophers, legal experts, and business strategists. Aim for 4-7 members to maintain agility. Egon Zehnder advises embracing continuous learning through external expertise, such as inviting academics or industry veterans. Strategic alignment means tying board input to key performance indicators (KPIs) like ROI on AI projects or risk mitigation metrics.

Practical steps include:

Conducting a Skills Inventory: Use tools like those from NACD to benchmark board AI fluency.

Diversifying Perspectives: Include voices from underrepresented groups to combat bias, as highlighted in Stanford HAI reports.

Integrating with Executive Teams: Ensure the board advises on but doesn’t overlap with operational roles, fostering collaboration.
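The gap analysis described above can be sketched in a few lines of Python. This is only an illustration: the competency areas, the 0-5 fluency scale, and every score below are hypothetical placeholders, not figures from Deloitte or NACD.

```python
# Hypothetical target fluency per competency area on a 0-5 scale (illustrative only).
REQUIRED = {"technical": 4, "ethics": 3, "legal": 3, "strategy": 4}


def skill_gaps(required, current):
    """Return (area, shortfall) pairs where the board falls short, largest gap first."""
    gaps = {area: need - current.get(area, 0) for area, need in required.items()}
    shortfalls = {area: gap for area, gap in gaps.items() if gap > 0}
    return sorted(shortfalls.items(), key=lambda item: item[1], reverse=True)


# Hypothetical self-assessed scores for the current board.
current_board = {"technical": 4, "ethics": 1, "legal": 2, "strategy": 5}

print(skill_gaps(REQUIRED, current_board))  # [('ethics', 2), ('legal', 1)]
```

In this made-up case, recruiting would start with an ethics advisor and then a legal expert, since those are the only areas below target.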

Case study: Microsoft’s AI governance approach, informed by advisory input, has led to ethical frameworks that support 25% of its AI hires through partnerships. By prioritizing this mix, firms can achieve 3-5x productivity gains from AI, per PwC data.

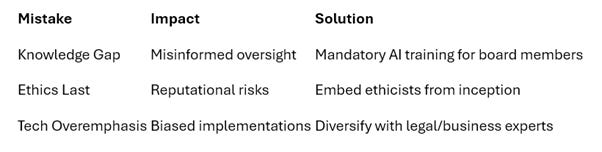

Pitfalls in AI Team Composition and How to Overcome Them

Despite growing awareness, many organizations falter in composing AI advisory boards.

A common error is the “knowledge gap,” where over half of boards lack AI fluency, leading to misinformed decisions.

Another is treating AI as a purely technical domain and ignoring cultural alignment, which results in resistance or ethical oversights, as noted in Medium analyses.

Other frequent mistakes include:

Tech-Centric Focus: Overemphasizing engineers while neglecting ethics, causing issues like biased algorithms (e.g., 35,445 AI job postings emphasize tech skills but undervalue governance).

Governance as an Afterthought: Failing to integrate ethics early, per Cognizant, leading to regulatory fines.

Lack of Clear Objectives: Diving into AI without defined problems, wasting resources (Forbes highlights this as a top mistake).

Possible solutions: Implement education programs, like Google’s AI Essentials course, which boosted project speed by 20%. Conduct culture audits and use decision matrices for build/buy/partner strategies. By addressing these, companies like Netflix have retained talent through adapted “keeper tests,” achieving 40-60% internal fulfilment.

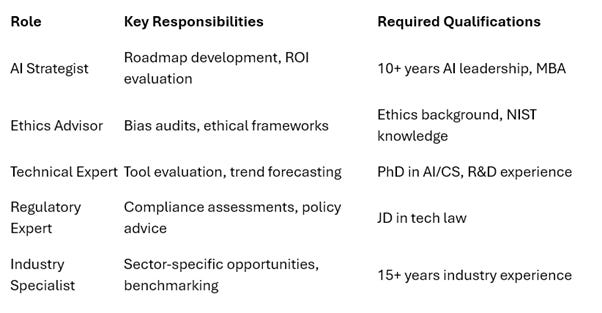

Role Descriptions: Essential Positions for a Robust AI Advisory Board

To fill these gaps, consider these key roles, each with detailed responsibilities drawn from IAPP and DHS frameworks.

AI Strategist:

Leads alignment of AI initiatives with business goals.

Responsibilities: Develop roadmaps, evaluate ROI, advise on scalability.

Qualifications: 10+ years in AI leadership, MBA or equivalent.

Example: Oversees projects like IBM’s upskilling, reducing external hires by 15%.

Ethics Advisor:

Ensures responsible AI deployment, addressing bias and transparency.

Duties: Conduct audits, recommend frameworks, monitor societal impacts.

Skills: Background in philosophy or ethics, familiarity with NIST AI Risk Management.

Example: Similar to OpenAI’s safety council, preventing ethical breaches.

Technical Expert:

Provides deep insights into AI technologies like NLP and ML.

Role: Evaluate tools, advise on integration, forecast trends.

Requirements: PhD in CS/AI, experience in R&D.

Impact: Drives innovations as in Microsoft’s partnerships.

Regulatory/Legal Expert:

Navigates compliance with laws like GDPR or upcoming AI Acts.

Tasks: Risk assessments, policy recommendations.

Expertise: JD with focus on tech law.

Example: Helps avoid fines, as in EY’s board support strategies.

Industry Domain Specialist:

Tailors AI advice to sector-specific needs (e.g., healthcare, finance).

Responsibilities: Identify opportunities, benchmark against peers.

Qualifications: 15+ years in industry, AI application knowledge.

Benefit: Enhances relevance, per Fortune’s AIQ 50.

These roles form a cohesive unit, with the board meeting quarterly to review progress.

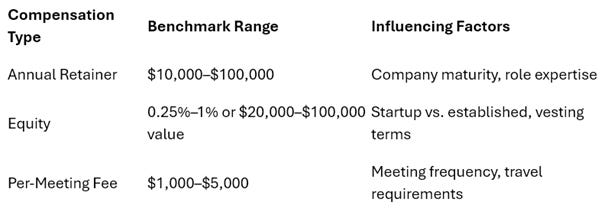

Compensation & Attracting Top Talent in a Competitive Market

Securing elite advisors requires competitive compensation, especially amid the AI talent crunch where engineers earn up to $917,000 annually. For advisory roles, which are part-time, benchmarks vary by company stage and role complexity.

According to IAPP’s 2025-26 Salary Report, AI governance professionals average $190,000 base, with medians at $151,800 for specialized roles. Advisory board members typically receive:

Annual Retainers: $10,000–$50,000 for established companies; higher ($50,000–$100,000) in tech/AI firms due to demand.

Equity Grants: 0.25%–1% for startups, vesting over 2-4 years; mature firms offer stock options valued at $20,000–$100,000.

Per-Meeting Fees: $1,000–$5,000, plus expenses.
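To see how these components add up, here is a rough sketch of the annual cash range for one advisor. It assumes four meetings a year, in line with the quarterly cadence mentioned earlier, and it ignores equity and expenses. The function name and parameters are illustrative, not an industry formula.

```python
def annual_cash_range(retainer, per_meeting_fee, meetings_per_year=4):
    """Combine (low, high) retainer and per-meeting fee ranges into an annual cash range.

    Assumes a fixed number of meetings per year; equity and expenses are excluded.
    """
    low = retainer[0] + meetings_per_year * per_meeting_fee[0]
    high = retainer[1] + meetings_per_year * per_meeting_fee[1]
    return low, high


# Established company: $10,000-$50,000 retainer, $1,000-$5,000 per meeting.
print(annual_cash_range((10_000, 50_000), (1_000, 5_000)))   # (14000, 70000)

# Tech/AI firm: $50,000-$100,000 retainer, same per-meeting fees.
print(annual_cash_range((50_000, 100_000), (1_000, 5_000)))  # (54000, 120000)
```

Under these assumptions, an advisor at an established company would receive roughly $14,000 to $70,000 in cash per year, and one at a tech or AI firm roughly $54,000 to $120,000.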

Several factors influence pay, including experience (senior roles command 20-30% premiums), location (U.S. tech hubs like San Francisco add 15-20%), and certifications (IAPP-qualified professionals earn higher medians). For example, AI executives can approach $2 million in total compensation, while advisors scale to 10-20% of that for fractional commitments.

By offering these packages, firms like those in Equilar’s analysis attract top talent, ensuring their AI advisory boards deliver lasting value.

In conclusion, investing in a well-composed AI advisory board positions your firm to thrive amid AI’s transformative power. Leaders who act now will not only mitigate risks but also unlock unprecedented opportunities.